A Lane Detection Algorithm Based on Improved RepVGG Network


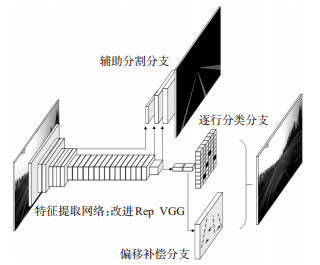

Abstract: To improve the speed and accuracy of lane detection in autonomous driving systems, a lane detection algorithm that decouples the training-state model from the inference-state model is proposed. A Squeeze-and-Excitation (SE) attention module is introduced into the structurally re-parameterized VGG (RepVGG) backbone network to strengthen the extraction of important lane-line features. A parallel, separable auxiliary segmentation branch is designed to model local features and improve detection accuracy. Lane lines are detected by row-wise position classification: a row-by-row detection branch is added after the backbone network, which reduces the computational load and allows occluded or damaged lane lines to be detected. An offset compensation branch refines the predicted lane-line coordinates horizontally within a local range to restore lane details. The training-state model is decoupled by structural re-parameterization, which equivalently converts the multi-branch model into a single-path model to improve the speed and accuracy of the inference-state model. Compared with the model before decoupling, the decoupled model is 81% faster and 11% smaller. The algorithm is tested on the public lane detection dataset CULane. Compared with UFAST18, the fastest of the current lane detection models based on deep residual neural networks, its detection speed is 19% higher, its model size is 12% smaller, and its F1-measure rises from 68.4 to 70.2. Its detection speed is 4 times that of the Self Attention Distillation (SAD) algorithm and 40 times that of the Spatial Convolutional Neural Network (SCNN) algorithm. In real-vehicle tests in an urban area, the lane detection results are accurate and stable in complex scenes such as congestion, curves, and shadows, and the missed-detection rate in common scenes is between 10% and 20%. The results show that structural re-parameterization helps optimize the model, and that the proposed algorithm can effectively improve the real-time performance and accuracy of lane detection in autonomous driving systems.
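The equivalence behind the structural re-parameterization step can be checked on a toy case. The sketch below is a minimal single-channel illustration in plain Python, assuming a RepVGG-style block with a 3×3 branch, a 1×1 branch, and an identity branch, and ignoring batch normalization and biases for brevity. The function names and kernel values are hypothetical and are not taken from the paper.

```python
def conv2d_same(img, kernel):
    """3x3 'same' cross-correlation with zero padding on a single channel."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:
                        acc += kernel[di + 1][dj + 1] * img[y][x]
            out[i][j] = acc
    return out


def multi_branch(img, k3, k1):
    """Training-state form: 3x3 branch + 1x1 branch (scalar k1) + identity branch."""
    y3 = conv2d_same(img, k3)
    return [[y3[i][j] + k1 * img[i][j] + img[i][j]
             for j in range(len(img[0]))] for i in range(len(img))]


def merge_branches(k3, k1):
    """Inference-state form: fold the 1x1 and identity branches into the 3x3 kernel."""
    merged = [row[:] for row in k3]
    merged[1][1] += k1 + 1.0  # both extra branches act only on the centre tap
    return merged


# Hypothetical values chosen only for illustration.
image = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k3 = [[0.5, -1.0, 0.25], [0.0, 2.0, -0.5], [1.0, 0.75, -0.25]]
k1 = 0.5

train_out = multi_branch(image, k3, k1)
infer_out = conv2d_same(image, merge_branches(k3, k1))
max_diff = max(abs(a - b)
               for row_a, row_b in zip(train_out, infer_out)
               for a, b in zip(row_a, row_b))
print("max abs difference:", max_diff)
```

Because convolution is linear, the single merged kernel reproduces the summed output of the three branches, which is why the single-path inference model can replace the multi-branch training model without changing its predictions.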

Key words: autonomous driving; computer vision; lane detection; RepVGG; offset compensation

Table 1. Structure configuration of feature extraction network of RepVGG

| Stage | Output size | First layer of stage | Other layers of stage |
| --- | --- | --- | --- |
| Stage 1 | 144×400 | 1×(R1-48) | |
| Stage 2 | 72×200 | 1×(R1-48) | 1×(R2-48) |
| Stage 3 | 36×100 | 1×(R1-96) | 3×(R2-96) |
| Stage 4 | 18×50 | 1×(R1-192) | 13×(R2-192) |
| Stage 5 | 9×25 | 1×(R1-1280) | |
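The output sizes listed for the five stages follow a simple halving pattern. The sketch below reproduces them, assuming that each stage downsamples by a stride of 2 and that the network input is 288×800; the input size is an inference from the 144×400 output of stage 1 and is not stated in the table. The function name is hypothetical.

```python
def stage_output_sizes(height, width, num_stages=5, stride=2):
    """Return the (height, width) of the feature map after each stage."""
    sizes = []
    for _ in range(num_stages):
        # Assumption: the first layer of every stage downsamples by `stride`.
        height, width = height // stride, width // stride
        sizes.append((height, width))
    return sizes


# Assumed input resolution (not given in the table itself).
sizes = stage_output_sizes(288, 800)
for index, (h, w) in enumerate(sizes, start=1):
    print(f"stage {index}: {h}x{w}")

# Layers per stage as listed: one first layer plus the other layers of the stage.
layers_per_stage = [1 + 0, 1 + 1, 1 + 3, 1 + 13, 1 + 0]
print("total layers:", sum(layers_per_stage))
```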

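Table 2. Structure configuration of feature extraction network of improved RepVGG

| Stage | Output size | First layer of stage | Other layers of stage |
| --- | --- | --- | --- |
| Stage 1 | 144×400 | 1×(R1-48) | SE |
| Stage 2 | 72×200 | 1×(R1-48) | 1×(R2-48) |
| Stage 3 | 36×100 | 1×(R1-96) | 3×(R2-96) |
| Stage 4 | 18×50 | 1×(R1-192) | 13×(R2-192) |
| Stage 5 | 9×25 | 1×(R1-1280) | SE |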
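The SE entries in stages 1 and 5 refer to the Squeeze-and-Excitation channel attention module. The sketch below shows the general squeeze, excitation, and scale steps in plain Python, assuming two bias-free fully connected layers; the weights, feature values, and function name are hypothetical and do not come from the paper.

```python
import math


def se_block(feature_map, w_reduce, w_expand):
    """Apply a bias-free SE block to a list of C channels (each a 2D list)."""
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature_map]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one gate per channel.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed)))
              for row in w_reduce]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w_expand]
    # Scale: reweight every channel by its gate.
    scaled = [[[g * v for v in row] for row in ch]
              for g, ch in zip(gates, feature_map)]
    return scaled, gates


# Hypothetical 2-channel, 2x2 feature map and weights (reduction to 1 hidden unit).
feature_map = [[[1.0, 2.0], [3.0, 4.0]],
               [[0.0, 0.0], [0.0, 8.0]]]
w_reduce = [[0.5, -0.25]]    # shape: hidden x channels
w_expand = [[1.0], [-1.0]]   # shape: channels x hidden

scaled, gates = se_block(feature_map, w_reduce, w_expand)
print("channel gates:", gates)
```

Channels with larger gates are emphasized and the rest are suppressed, which is the mechanism the abstract relies on to strengthen important lane-line features.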

Table 3. Comparison of inference model size

| Inference model | Model size/MB |
| --- | --- |
| UFAST18 | 182 |
| Improved RepVGG, before decoupling | 180 |
| Improved RepVGG, after decoupling | 160 |

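Table 4. Comparison of inference speed (unit: fps)

| Network model | Average speed | Maximum speed | Minimum speed |
| --- | --- | --- | --- |
| UFAST18 | 253 | 499 | 52 |
| Improved RepVGG, before decoupling | 167 | 199 | 138 |
| Improved RepVGG, after decoupling | 302 | 999 | 81 |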
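The percentages quoted in the abstract can be recovered from the model sizes in Table 3 and the average speeds in Table 4. The short sketch below does that arithmetic; the helper name is hypothetical, and the inputs are the values reported in the two tables.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# Average speed (fps) and model size (MB) as reported in Tables 3 and 4.
speed_vs_undecoupled = percent_change(167, 302)  # decoupling speed-up
size_vs_undecoupled = percent_change(180, 160)   # decoupling size change
speed_vs_ufast18 = percent_change(253, 302)      # speed relative to UFAST18
size_vs_ufast18 = percent_change(182, 160)       # size relative to UFAST18

print(f"speed vs. un-decoupled model: {speed_vs_undecoupled:+.1f}%")
print(f"size vs. un-decoupled model:  {size_vs_undecoupled:+.1f}%")
print(f"speed vs. UFAST18:            {speed_vs_ufast18:+.1f}%")
print(f"size vs. UFAST18:             {size_vs_ufast18:+.1f}%")
```

Rounded to whole percentages, these give the 81% and 19% speed gains and the 11% and 12% size reductions stated in the abstract.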

Table 5. Comparison of detection speed of lane detection algorithms (unit: fps)

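Table 6. Comparison of F1-measure

| Category | Improved RepVGG | SAD | UFAST18 | UFAST34 |
| --- | --- | --- | --- | --- |
| Normal | 90.2 | 89.8 | 87.7 | 90.7 |
| Crowded | 68.0 | 68.1 | 66.0 | 70.2 |
| Night | 64.9 | 64.2 | 62.1 | 66.7 |
| No lane line | 41.3 | 42.5 | 40.2 | 44.4 |
| Shadow | 63.9 | 67.5 | 62.8 | 69.3 |
| Arrow | 83.8 | 83.9 | 81.0 | 85.7 |
| Glare | 58.6 | 59.8 | 58.4 | 59.5 |
| Curve | 64.7 | 65.5 | 57.9 | 69.5 |
| Crossroad | 1832.0 | 1995.0 | 1743.0 | 2037.0 |
| Overall | 70.2 | 70.5 | 68.4 | 72.3 |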
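For reference, the F1-measure reported in Table 6 is the harmonic mean of precision and recall. The sketch below computes it from counts of true positives, false positives, and false negatives; the counts used in the example are hypothetical, chosen only so that the result lands at 70.2, and how a predicted lane is matched to a ground-truth lane is outside the scope of this sketch.

```python
def f1_measure(tp, fp, fn):
    """F1 = 2PR / (P + R), with P = TP / (TP + FP) and R = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Hypothetical counts, for illustration only (not taken from the paper).
example_f1 = f1_measure(tp=702, fp=298, fn=298)
print(f"F1-measure: {example_f1 * 100:.1f}")

# Overall gain over UFAST18 as reported in Table 6.
overall_gain = round(70.2 - 68.4, 1)
print("overall F1 gain over UFAST18:", overall_gain)
```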

